Starting with Java 1.5, Java introduced an advanced locking mechanism that lets us restrict and control access to shared resources more flexibly, in addition to improving performance. The mechanism has several implementations, such as ReentrantLock and ReentrantReadWriteLock.

The Lock interface in the “java.util.concurrent.locks” package provides thread synchronization in a more advanced and controlled way than synchronized blocks. It offers several lock methods and more options for controlling or restricting access to shared resources.

Locking Strategies

Here, I describe different locking strategies for applications with multiple shared resources. I also cover deadlock: when it happens and how to avoid it.

When we write a multi-threaded application, we need to choose between fine-grained and coarse-grained locking. Assuming that we have multiple shared resources, should we have one single lock for all of them, or a separate lock for every resource?

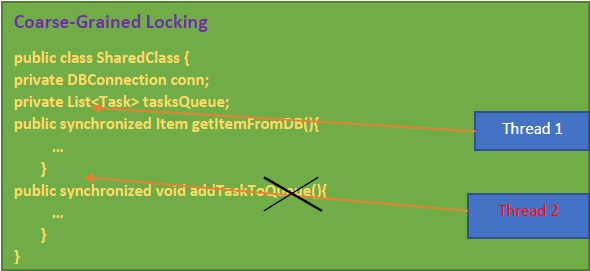

The advantage of a coarse-grained strategy is that we have only a single lock to worry about. Whenever any shared resource is accessed, we just use that one lock. Let’s take as an example a class that holds two shared resources: a database and a task queue.
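A minimal sketch of such a class is shown here. The class name, the method names, and the use of a map as a stand-in for the database are all assumptions made for illustration.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Hypothetical class: the map stands in for a database, the deque for a task queue.
class CoarseGrainedResources {
    private final Map<Integer, String> database = new HashMap<>();
    private final Queue<String> tasks = new ArrayDeque<>();

    // Every synchronized instance method locks on `this`,
    // so a single lock guards both resources.
    public synchronized void putItem(int key, String value) {
        database.put(key, value);
    }

    public synchronized String getItem(int key) {
        return database.get(key);
    }

    public synchronized void addTask(String task) {
        tasks.add(task);
    }

    public synchronized String pollTask() {
        return tasks.poll(); // returns null when the queue is empty
    }
}
```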

This class has two shared resources, and each needs protection against concurrent access. We can simply synchronize all the methods, which effectively creates a single lock for the entire object. So, if one thread is getting an item from the database, another thread that tries to add a task to the queue cannot do so until the first thread has finished.

This strategy is definitely very simple to maintain, which is its big advantage. However, it does feel like overkill, since those operations do not interfere with each other. So why not allow them to execute concurrently? There is no right or wrong here; it is simply a decision we need to make.

The price we may pay for this simplicity is that, in the worst case, only one thread at a time can make progress. That would be the case if all the threads did nothing but access those shared resources. In real-world scenarios, threads usually do more than just access shared resources, so some concurrent work can still be performed. Even so, this is a definite drawback of the strategy.

If we lock every shared resource individually, which is equivalent to creating a separate lock for every resource, then we have a fine-grained locking strategy that allows more parallelism and less contention. The problem we may run into when we have multiple locks is called deadlock.
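For comparison, a fine-grained version of a hypothetical class holding a database map and a task queue might give each resource its own lock object. The names below are illustrative assumptions, not code from the original article.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Hypothetical class: each shared resource is guarded by its own lock object.
class FineGrainedResources {
    private final Map<Integer, String> database = new HashMap<>();
    private final Queue<String> tasks = new ArrayDeque<>();

    private final Object databaseLock = new Object();
    private final Object tasksLock = new Object();

    public void putItem(int key, String value) {
        synchronized (databaseLock) {
            database.put(key, value);
        }
    }

    public String getItem(int key) {
        synchronized (databaseLock) {
            return database.get(key);
        }
    }

    // Uses a different lock, so it never waits for database operations.
    public void addTask(String task) {
        synchronized (tasksLock) {
            tasks.add(task);
        }
    }

    public String pollTask() {
        synchronized (tasksLock) {
            return tasks.poll();
        }
    }
}
```

Because no method here ever holds both locks at once, this particular class cannot deadlock; the risk appears once a single operation needs more than one lock.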

Deadlock

A deadlock is a situation where everyone is trying to make progress but cannot, because each is waiting for another party to make a move. This circular dependency, where everyone says “I will move if you move first”, is usually unrecoverable. Now let’s see where it can happen in our software.

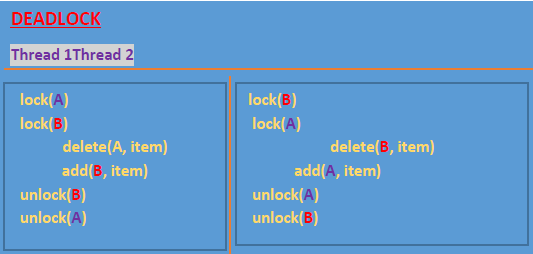

Let’s look at the scenario shown in the diagram:

In this scenario, we have two threads. The first wants to delete an item from resource A and add it to resource B. The second wants to delete an item from resource B and add it to resource A. The resources are shared, so we maintain a separate lock for each of them. Now, let’s look at the following order of events.

Thread 1 is scheduled and locks resource A, and then thread 2 is scheduled and acquires the lock on resource B. After that, thread 2 tries to acquire the lock on resource A, but that lock is already held by thread 1. So, thread 2 is suspended until thread 1 releases the lock on resource A.

Then thread 1 tries to make progress by acquiring the lock on resource B, which is held by thread 2. Now we are stuck. Thread 1 cannot proceed until thread 2 releases the lock on resource B, and thread 2 cannot proceed because it is waiting for thread 1 to release the lock on resource A. We have reached a deadlock.

Now that we have seen what a deadlock looks like and how unrecoverable it is, let’s formally describe the conditions that can lead to one, so that we can develop a systematic way to avoid it.

- The first condition is “Mutual exclusion”. This means only one thread can have exclusive access to a resource at a given moment.

- The second condition is “Hold and wait”. This means at least one thread is holding a resource and is waiting for another resource.

- The third condition is “Non-preemptive allocation”. This means that a resource cannot be released until the thread using it is done with it. In other words, no thread can take away another thread’s resources. It just has to wait until the thread holding the resource releases it.

- The fourth and last condition is “Circular wait”. This is the situation we found when our threads were in deadlock. One thread was holding resource A and waiting for resource B to be released, while the other thread was holding resource B and waiting for resource A to be released.

If all of the above conditions are met, then a deadlock is possible, and it is only a matter of time before it happens.

The obvious solution to the deadlock problem is to make sure that at least one of those conditions is not met. Then a deadlock simply cannot happen. The easiest way to avoid a deadlock is to break the last condition, the “Circular wait”. The way to do that is to acquire the locks on the shared resources in the same order and stick to that order everywhere in the code.

In our last example (Diagram-3), we saw that the two threads acquire the locks on resources A and B in different orders. If we change one of those threads to acquire them in the same order as the other thread, there is no way to get into a deadlock, because we can never have a circular dependency.

Notice in the above diagram that the order in which the locks are released is not important. Enforcing a strict order on lock acquisition is what prevents deadlocks. This is the best solution, and with a small number of locks in the program it is very doable. However, some complex applications may have a very large number of locks, and maintaining the order there can be a difficult task. In that case, we have to look for different techniques.
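The lock-ordering rule can be sketched as follows. This is an illustrative example under stated assumptions: the class `OrderedTransfer`, its lists standing in for resources A and B, and the `runDemo()` helper are all hypothetical names. Both transfer directions acquire `lockA` before `lockB`, so a circular wait cannot form.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example: two lists stand in for resources A and B.
class OrderedTransfer {
    private final List<Integer> resourceA = new ArrayList<>();
    private final List<Integer> resourceB = new ArrayList<>();
    private final Lock lockA = new ReentrantLock();
    private final Lock lockB = new ReentrantLock();

    OrderedTransfer(int itemsPerResource) {
        for (int i = 0; i < itemsPerResource; i++) {
            resourceA.add(i);
            resourceB.add(i);
        }
    }

    // Moves one item between the lists; always locks A first, then B.
    private void move(List<Integer> from, List<Integer> to) {
        lockA.lock();
        try {
            lockB.lock();
            try {
                if (!from.isEmpty()) {
                    to.add(from.remove(from.size() - 1));
                }
            } finally {
                lockB.unlock();
            }
        } finally {
            lockA.unlock();
        }
    }

    public void moveFromAToB() { move(resourceA, resourceB); }

    public void moveFromBToA() { move(resourceB, resourceA); }

    public int totalItems() {
        lockA.lock();
        try {
            lockB.lock();
            try {
                return resourceA.size() + resourceB.size();
            } finally {
                lockB.unlock();
            }
        } finally {
            lockA.unlock();
        }
    }

    // Runs two threads moving items in opposite directions and returns the item count.
    static int runDemo() {
        final OrderedTransfer transfer = new OrderedTransfer(10);
        Thread first = new Thread(() -> {
            for (int i = 0; i < 1000; i++) transfer.moveFromAToB();
        });
        Thread second = new Thread(() -> {
            for (int i = 0; i < 1000; i++) transfer.moveFromBToA();
        });
        first.start();
        second.start();
        try {
            first.join();
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return transfer.totalItems();
    }
}
```

If one of the two directions locked B before A instead, the interleaving described earlier could leave both threads waiting forever.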

One such technique is using a “watchdog” for deadlock detection, which can be implemented in many ways. Another is to check whether a lock is already held by another thread before actually trying to acquire it and possibly getting suspended. This operation is called “tryLock”. Unfortunately, the synchronized keyword neither allows a suspended thread to be interrupted nor offers a tryLock operation. However, other types of lock do allow this, and I describe them below.

Advanced Locking Anatomy in Multithreading

One such advanced lock is the “ReentrantLock”. Here I discuss a few of its operations, including “lockInterruptibly()” and “tryLock()”, and explain the use of tryLock with a practical example.

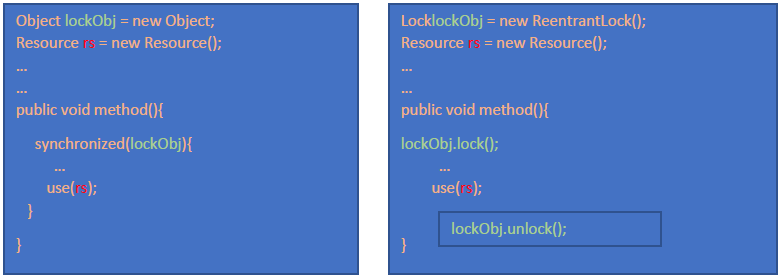

ReentrantLock: The reentrant lock works just like the synchronized keyword applied to an object. However, unlike the synchronized block, the reentrant lock requires both explicit locking and unlocking. Let’s look at this in detail.

In the above diagram, see how the synchronized keyword works on the lock object. Locking happens at the beginning of the synchronized block, and unlocking happens when the synchronized block ends.

Now, look at the right side of the above diagram (diagram-6). It shows the exact same logic, but using the reentrant lock. Instead of creating an arbitrary object to use as a lock, I have created a ReentrantLock object, which implements the Lock interface. Then, to protect a resource from concurrent access, I call the lock() method explicitly, and to release the lock, I call the unlock() method explicitly.

Disadvantages: The obvious disadvantage of explicit locking and unlocking is that after we are done using the shared resource, we may forget to unlock the lock object. This would leave it locked forever, which is a source of bugs and deadlocks. But even if we remember to unlock the lock, we still have a problem when a method throws an exception inside our critical section.

In that case, we never even get the chance to call the unlock() method, because execution leaves the method as soon as the exception is thrown. The pattern we need to follow to avoid these problems is to surround the entire critical section with a try block and put the unlocking of the lock object in the finally block.

This way, we guarantee that the unlock() method is called no matter what happens in the critical section. This pattern also allows us to unlock the lock object after the return statement, which would otherwise be impossible. In exchange for this extra complexity, we get more control over the lock as well as a lot of advanced operations.
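The try/finally pattern described above can be sketched like this. The class `SafeCounter` and its methods are hypothetical names chosen for illustration; only `ReentrantLock`, `lock()`, `unlock()`, and `isLocked()` come from the JDK.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class used only to illustrate the lock / try / finally pattern.
class SafeCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private int value;

    public int incrementAndGet() {
        lock.lock();
        try {
            value++;
            return value; // the finally block still runs before the method returns
        } finally {
            lock.unlock();
        }
    }

    // Simulates a critical section that fails; the lock is released anyway.
    public void failInsideCriticalSection() {
        lock.lock();
        try {
            throw new IllegalStateException("simulated failure");
        } finally {
            lock.unlock();
        }
    }

    public boolean isLocked() {
        return lock.isLocked();
    }
}
```

Note that lock() is called before the try block, so unlock() only runs when the lock was actually acquired.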

First of all, we have a few convenient methods that are good for testing.

- getQueuedThreads() method: This method returns a collection of the threads that may be waiting to acquire the lock. It is a protected method, so it is meant to be used from a subclass of ReentrantLock.

- getOwner() method: This method returns the thread that currently holds the lock, or null if no thread holds it. It is also protected.

- isHeldByCurrentThread() method: This method returns true if the current thread holds the lock.

- isLocked() method: This method returns true if the lock is held by any thread at the moment.

All production code needs to be thoroughly tested, and if we are testing a complex multithreaded application, the above methods are very handy.
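As a hedged illustration of how these methods might be used in test code, the sketch below wraps them in a hypothetical subclass, `InspectableLock`. The subclass is needed because getOwner() and getQueuedThreads() are protected; the `snapshot()` helper is an assumption made for this example.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical subclass that exposes the protected inspection methods.
class InspectableLock extends ReentrantLock {

    public Thread owner() {
        return getOwner(); // null when no thread holds the lock
    }

    public int waitingThreads() {
        return getQueuedThreads().size(); // best-effort estimate
    }

    // Builds a small status string, e.g. for log output in a test.
    public String snapshot() {
        return "locked=" + isLocked()
                + ", heldByMe=" + isHeldByCurrentThread()
                + ", waiting=" + waitingThreads();
    }
}
```

The values returned by these methods can be stale by the time they are read, so they suit monitoring and assertions in tests rather than synchronization decisions.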

Another area where the reentrant lock shines is control over the lock’s fairness. By default, neither the reentrant lock nor the synchronized keyword guarantees any fairness. So, if we have many threads waiting to acquire a lock on a shared object, one thread may get to acquire the lock multiple times while another thread is starved and gets the lock only much later.

If your application needs to guarantee such fairness, the reentrant lock allows us to pass true into the constructor to make the lock fair: “ReentrantLock(true)”. Please note that maintaining fairness comes at a cost and may actually reduce the throughput of the application, because lock acquisition might take longer. So, use the fairness flag only when you actually need it.
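A short sketch of the two constructors follows; the holder class `FairnessExample` and its field names are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical holder class showing the two ways to construct a ReentrantLock.
class FairnessExample {
    // Default constructor: no fairness guarantee.
    static final ReentrantLock DEFAULT_LOCK = new ReentrantLock();

    // Passing true requests a fair policy that favors the longest-waiting thread.
    static final ReentrantLock FAIR_LOCK = new ReentrantLock(true);
}
```

The isFair() method reports which policy a given lock was created with.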

Another powerful feature of the ReentrantLock is the lockInterruptibly() method. Generally, if a thread tries to acquire a lock object with lock() while another thread is already holding it, the current thread is suspended and does not wake up until the lock is released.

In this case, calling the interrupt() method to wake up the suspended thread would not do anything. But if, instead of the lock() method, we call lockObject.lockInterruptibly() while another thread is already holding the lock, we can still get out of this suspension. The lockInterruptibly() method forces us to handle the InterruptedException, typically by surrounding the call with a try/catch block.

So, if the thread is waiting on the lock and another thread interrupts it by calling the interrupt() method, the suspended thread wakes up and jumps to the catch block. Inside the catch block we can do some cleanup and shut down the thread properly.
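The behavior of lockInterruptibly() can be sketched as follows. The class `InterruptibleWaiter` and its `runDemo()` helper are hypothetical; the demo holds the lock in the calling thread, lets a worker block on it, and then interrupts the worker.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo: a worker blocked in lockInterruptibly() is woken by interrupt().
class InterruptibleWaiter {

    // Returns true if the worker left its wait through the catch block.
    static boolean runDemo() {
        final ReentrantLock lock = new ReentrantLock();
        final boolean[] wokenByInterrupt = {false};

        lock.lock(); // the calling thread holds the lock, so the worker must wait
        try {
            Thread worker = new Thread(() -> {
                try {
                    lock.lockInterruptibly();
                    try {
                        // critical section (not reached in this demo)
                    } finally {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    // clean up here, then let the thread finish
                    wokenByInterrupt[0] = true;
                }
            });
            worker.start();

            // Wait until the worker is actually queued on the lock.
            while (!lock.hasQueuedThread(worker)) {
                Thread.yield();
            }
            worker.interrupt();
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return wokenByInterrupt[0];
    }
}
```

With a plain lock() call in the worker, the interrupt would only set the thread's interrupt flag and the worker would keep waiting.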

Use cases of lockInterruptibly() method

- The lockInterruptibly() method is very useful if we are implementing a watchdog that, for example, detects deadlocked threads and interrupts them to recover from the deadlock.

- Another use case is when we want to close the application properly, but we still have some threads suspended waiting for a lock. In that case, we can simply interrupt them, which wakes them up, lets them do some cleanup work, and allows the application to finish.

The most powerful operation that ReentrantLock provides is tryLock(). The tryLock operation tries to acquire the lock just like the regular lock() method. If the lock is available, tryLock simply acquires it and returns true.

If the lock is currently unavailable, then instead of blocking the thread, the method simply returns false and moves on to the next instruction.

The signatures are boolean tryLock() and boolean tryLock(long timeout, TimeUnit unit). It is easier to understand how tryLock works and why it is useful by comparing it side by side with the conventional lock() method.
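The comparison can be sketched as two methods that perform the same update, one with lock() and one with tryLock(). The class `TryLockExample`, its methods, and the `demoContended()` helper are hypothetical names used only for this illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class comparing lock() and tryLock() on the same shared value.
class TryLockExample {
    private final ReentrantLock lock = new ReentrantLock();
    private int sharedValue;

    // Conventional lock(): blocks until the lock becomes available.
    public void updateBlocking(int newValue) {
        lock.lock();
        try {
            sharedValue = newValue;
        } finally {
            lock.unlock();
        }
    }

    // tryLock(): returns immediately, reporting whether the lock was acquired.
    public boolean updateIfAvailable(int newValue) {
        if (lock.tryLock()) {
            try {
                sharedValue = newValue;
                return true;
            } finally {
                lock.unlock();
            }
        } else {
            // Lock is held elsewhere: do other work and maybe retry later.
            return false;
        }
    }

    public int read() {
        lock.lock();
        try {
            return sharedValue;
        } finally {
            lock.unlock();
        }
    }

    // Holds the lock in the calling thread while another thread calls tryLock().
    static boolean demoContended() {
        final TryLockExample example = new TryLockExample();
        final boolean[] acquired = {true};
        example.lock.lock();
        try {
            Thread other = new Thread(() -> acquired[0] = example.updateIfAvailable(42));
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            example.lock.unlock();
        }
        return acquired[0];
    }
}
```

The unlock() call sits inside the branch where tryLock() returned true, because unlocking a lock the thread does not hold throws an IllegalMonitorStateException.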

Suppose the same logic is written twice: once acquiring the lock with the regular lock() method, and once with the tryLock() method. First, consider the scenario where the lock is available at the time we try to acquire it.

- Scenario 1: Using conventional lock(): When we call the lock() method, the lock is acquired, preventing any other thread from entering the critical section, and we simply move on to the next instruction inside the critical section, where we can safely use the shared resource. Once the thread is done using the shared resource, it calls the unlock() method to allow other threads to access it.

- Scenario 1: Using tryLock(): If we perform the same logic with the tryLock() method, the lock is also acquired, but the method additionally returns true to let us know that the current thread acquired the lock successfully. From that point on, the flow is similar. The thread uses the shared resource and, when it is done, releases the lock by calling the unlock() method.

- Scenario 2: Using conventional lock(): The big difference is in the second scenario, where the lock has already been acquired by another thread and is currently unavailable. Here, once the thread calls the regular lock() method, it cannot go any further and is blocked until the lock becomes available again. Only when the lock is released by its current owner does the thread wake up, acquire the lock, and move on to the next instruction.

- Scenario 2: Using tryLock(): If we call the tryLock() method when the lock is already held by another thread, the thread does not get blocked. Instead, tryLock() returns false immediately, which tells us that the lock does not belong to us and that we should not use the shared resource. The thread can then execute some different code in the else branch that does not need the shared resource, and come back later to try to acquire the lock again.

Notice that the no-argument tryLock() method never blocks. Regardless of the state of the lock, it always returns immediately, whereas the timed variant waits at most for the given timeout. Acquiring a lock with tryLock() has very important use cases in real-time applications where suspending a thread on a lock is unacceptable. Examples include high-speed trading systems or applications with a user interface thread. In all these examples, blocking and suspending a thread would make the application less responsive, yet the thread still needs to share resources safely with other, non-real-time threads.

Race Condition

This is a real challenge in multi-threaded applications. When multiple threads access a shared resource, at least one of them modifies it, and the timing or order of thread scheduling may cause incorrect results, we have a race condition. The core of the problem is the execution of non-atomic operations on a shared resource.

The solution for avoiding a race condition is to identify the critical section where it happens and protect it by enclosing it in a synchronized block.
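As a minimal sketch of this fix, consider a hypothetical `InventoryCounter` whose `items++` and `items--` operations are not atomic on their own (each is a read, a modification, and a write). Enclosing them in synchronized blocks on a shared lock object removes the race. The class and the `runDemo()` helper are illustrative assumptions.

```java
// Hypothetical counter: the synchronized blocks make each update atomic.
class InventoryCounter {
    private final Object lock = new Object();
    private int items;

    public void increment() {
        synchronized (lock) {
            items++; // read-modify-write, protected by the lock
        }
    }

    public void decrement() {
        synchronized (lock) {
            items--;
        }
    }

    public int getItems() {
        synchronized (lock) {
            return items;
        }
    }

    // One thread increments and another decrements the same number of times.
    static int runDemo() {
        final InventoryCounter counter = new InventoryCounter();
        Thread incrementing = new Thread(() -> {
            for (int i = 0; i < 10000; i++) counter.increment();
        });
        Thread decrementing = new Thread(() -> {
            for (int i = 0; i < 10000; i++) counter.decrement();
        });
        incrementing.start();
        decrementing.start();
        try {
            incrementing.join();
            decrementing.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.getItems();
    }
}
```

Without the synchronized blocks, updates from the two threads could overwrite each other and the final count would often be nonzero.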

Overview of Semaphores

A semaphore is a thread synchronization tool. Here I give just a brief overview of what a semaphore is and how it differs from a lock.

A semaphore is like a permit-issuing and permit-enforcing authority. It can be used to restrict the number of users of a particular resource or group of resources to any given number. An example of such a resource is a parking lot. Let’s say the lot has 8 parking spaces. Then at most 8 parking permits can be issued, for 8 cars, at a given time.

When there are 8 cars in the lot, any additional car that wants to acquire a permit to park has to wait. When one or more cars leave the parking lot, their permits are given back to the semaphore, where they can be acquired by waiting cars or kept for cars that arrive in the future.

    Semaphore semaphore = new Semaphore(NUMBER_OF_PERMITS);
    semaphore.acquire(NUMBER_OF_PERMITS); // 0 permits now available
    semaphore.acquire();                  // thread is blocked here

Every time a thread calls the acquire() method, it takes a permit if one is available and then moves on to the next instruction. A thread can acquire more than one permit at a time by passing the number of permits into the acquire() method. In the same way, the release() method can take an argument and release more than one permit. Calling acquire() on a semaphore that has no permits left blocks the thread until another thread releases a permit.
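The parking lot example can be sketched as below. The class `ParkingLot` and its method names are hypothetical; the non-blocking tryAcquire() is included so that a full lot can be observed without suspending the calling thread.

```java
import java.util.concurrent.Semaphore;

// Hypothetical parking lot guarded by a semaphore with 8 permits.
class ParkingLot {
    private static final int NUMBER_OF_PERMITS = 8;
    private final Semaphore permits = new Semaphore(NUMBER_OF_PERMITS);

    // Blocks until a space is free.
    public void park() throws InterruptedException {
        permits.acquire();
    }

    // Non-blocking variant: returns false immediately when the lot is full.
    public boolean tryPark() {
        return permits.tryAcquire();
    }

    // Gives the permit back so that another car can park.
    public void leave() {
        permits.release();
    }

    public int freeSpaces() {
        return permits.availablePermits();
    }
}
```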

Actually, a semaphore is not a great choice for a lock, because it differs from a lock in many ways. First, a semaphore has no notion of an owner thread, since many threads can acquire a permit. Also, the same thread can acquire a semaphore multiple times.

A binary semaphore, that is, one initialized with a single permit, is not reentrant. If the same thread acquires it and then tries to acquire it again, that thread is stuck and relies on another thread to release the semaphore in order to wake up.

Another difference is that a semaphore can be released by any thread, even one that has not acquired it. This can create a situation where one thread acquires the binary semaphore, assuming it has the shared resources to itself, while another thread accidentally releases the semaphore and consequently lets two threads into a critical section that was supposed to allow only one.

This is a bug, and it is the programmer’s fault if it happens. With the locks discussed earlier, this situation is not possible, because only the thread that holds the lock is allowed to unlock it. Because of these differences, a semaphore is a great choice for producer-consumer use cases.
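One common way to build a producer-consumer exchange with semaphores is sketched below. It is an illustration under assumptions, not a method from the original article: the class `BoundedBuffer`, its two semaphores, and the `runDemo()` helper are hypothetical. It relies on the property described above, that one thread may release a permit that a different thread acquires.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.Semaphore;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical bounded buffer coordinated by two semaphores.
class BoundedBuffer {
    private final Queue<Integer> items = new ArrayDeque<>();
    private final ReentrantLock lock = new ReentrantLock(); // guards the queue itself
    private final Semaphore emptySlots;                     // permits = free capacity
    private final Semaphore filledSlots = new Semaphore(0); // permits = items available

    BoundedBuffer(int capacity) {
        emptySlots = new Semaphore(capacity);
    }

    public void produce(int item) throws InterruptedException {
        emptySlots.acquire(); // wait for free capacity
        lock.lock();
        try {
            items.add(item);
        } finally {
            lock.unlock();
        }
        filledSlots.release(); // released by the producer, acquired by the consumer
    }

    public int consume() throws InterruptedException {
        filledSlots.acquire(); // wait for an item
        int item;
        lock.lock();
        try {
            item = items.remove();
        } finally {
            lock.unlock();
        }
        emptySlots.release();
        return item;
    }

    // Produces 1..10 in one thread, sums them in another, and returns the sum.
    static int runDemo() {
        final BoundedBuffer buffer = new BoundedBuffer(2);
        final int[] sum = {0};
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= 10; i++) buffer.produce(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < 10; i++) sum[0] += buffer.consume();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }
}
```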

Conclusion

Here you learned an overview of the different types of locks available in Java and how to use the advanced locking interfaces and methods in your multithreaded application. You should also have a clear idea of deadlock and race condition scenarios in Java multithreading and how to prevent them in your multithreaded application.